Input Lag measurement using a slow high-speed camera: achieving millisecond accuracy with piLagTester

The piLagTester is a cheap ($5) DIY input lag tester built on a Raspberry Pi Zero. It works with any camera capable of recording video at 60FPS or higher. This post details how to get 1-2ms resolution even if you only have a 60FPS camera. If you are not familiar with the piLagTester, read the overview first.

The basic procedure is to load the video into an editor that lets you advance frame by frame. I use Shotcut, but there are many others. Sadly, I have not found any for Android that let you step forward and back by single frames.

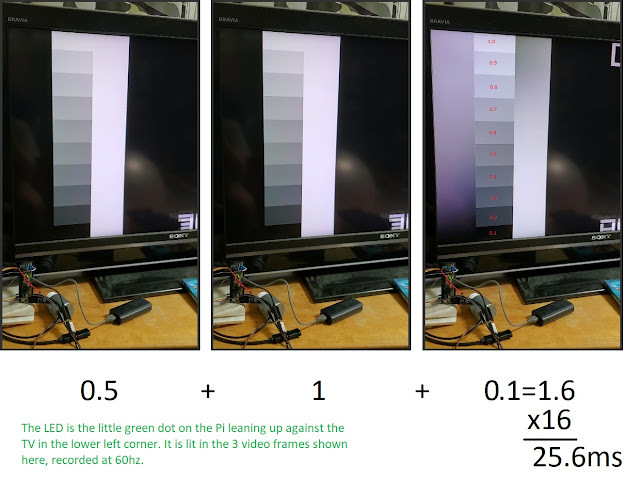

Search through the video until you find the frame where the Pi LED first lights up. This is the moment when the frame showing the target white bar has been fully sent over HDMI to the monitor. Now count how many frames the target takes to show up: advance frame by frame until the white probe first appears at the top of the screen (it's the tall white/gray bar in the 3rd frame, under the word "BRAVIA"). In this example that's 2 frames of delay. Multiplying that by the length of a camera frame (1/FPS = 16ms) gives the lag (32ms), with an average error of 1 frame length. If the camera is very fast this is plenty good enough; for instance, a Samsung S9 running at 960fps will only be off by about 1ms.
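The whole-frame count is simple enough to sketch in a few lines of Python. The function names are made up for illustration and are not part of the piLagTester software. Note that the code uses the exact frame length (1/60 s is about 16.7ms), while the text rounds to 16ms, so 2 frames at 60fps comes out as 33.3ms rather than 32ms.

```python
def frame_period_ms(fps: float) -> float:
    """Length of one camera frame in milliseconds."""
    return 1000.0 / fps


def whole_frame_lag_ms(frames_counted: int, fps: float) -> float:
    """Lag estimate from counting whole camera frames only.

    frames_counted: frames between the LED lighting up and the
    target bar first appearing (hypothetical parameter name).
    """
    return frames_counted * frame_period_ms(fps)


if __name__ == "__main__":
    # One frame of error shrinks as the camera gets faster.
    for fps in (60, 240, 960):
        print(f"{fps:>4} fps: one frame = {frame_period_ms(fps):.2f} ms, "
              f"2 frames = {whole_frame_lag_ms(2, fps):.2f} ms")
```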

With a slower camera 1 frame of error might be intolerable. But it's possible to do almost as well as a high speed camera using some math. If you have a 240fps camera just skip the following unless you like math.

Let's try the same example with a 60fps camera (60fps=60hz=16ms between frames). We extend the same counting method, but now with partial frames. The first frame where the LED lights up counts for 1/2 a frame because we don't know if it lit up at the beginning or end of when the frame was captured. So we assume halfway.

Each additional frame with no white bar counts for 1 full frame, as does the frame where the white bar appears. But that last frame is going to be an overestimate, because we know the display started drawing before the frame was captured. We can estimate how much by measuring how far down the screen the bar reaches. The further down the screen, the further back in time. In this case it's about 90% of the way to the bottom, so we can subtract 90% of the length of a monitor refresh cycle, giving the following calculation: (0.5 + 1 + 1) x 16 - (0.9 x 16). Here the refresh rate and the camera are both 60hz, so we can combine the terms before multiplying, giving (0.5 + 1 + 0.1) x 16 = 25.6ms. This compares quite favorably to oscilloscope-based measurements of this monitor's lag time, which fall between 23ms and 24ms.

Confused? Let's consider another example also with a 60fps camera and a 60hz monitor.

At the top we have a representation of the frames captured by the camera. The timeline shows the moment in time those frames are captured (we'll assume instantly, or close enough if you orient your camera properly).

On the first frame the LED is off. 10ms after that frame, the LED turns on. Since we have no way to measure this, we just assume the LED was on for half of the frame where it first shows up lit, so we count 0.5 frames. Then we have 1 full frame where the LED is on and the target does not appear. On the next frame, at 48ms, the target bar is seen in the camera's frame, but only 25% of it. We include the full final frame in our count, and multiply the count by the camera's frame length: (0.5 + 1 + 1) x 16ms = 40ms. This is of course an overestimate. To correct it, we subtract the amount of time the target had been visible when the final frame was captured. Here that's 25% of the time it takes the monitor to draw from the top of the screen to the bottom, i.e. one refresh period (1 / refresh rate). So we subtract 0.25 x 16ms, giving the final answer of 40ms - 4ms = 36ms.
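Written out as a formula, the estimate used in both examples looks like this. The symbols are shorthand introduced here for illustration: $N$ is the number of camera frames after the first LED-lit frame, up to and including the frame where the target appears; $T_{\text{cam}}$ is the camera frame length (1/FPS); $p$ is the fraction of the screen height the target has reached; and $T_{\text{disp}}$ is the monitor's refresh period (1/refresh rate).

```latex
\text{lag} \approx (0.5 + N)\,T_{\text{cam}} - p\,T_{\text{disp}}

% Example above: N = 2, p = 0.25, T_cam = T_disp = 16 ms
\text{lag} \approx (0.5 + 2)\times 16\,\text{ms} - 0.25 \times 16\,\text{ms} = 36\,\text{ms}
```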

Here are some examples worked out:

75hz display, 120fps camera, LED on 2 frames and then on the 3rd 30% of the target appears:

(0.5 + 1 + 1) x 1/120 - 0.3 x 1/75 = 0.0168 seconds, or about 16.8ms

120hz display, 240fps camera, LED on 3 frames and then on the 4th 80% of the target appears:

(0.5 + 1 + 1 + 1) x 1/240 - 0.8 x 1/120 = 0.0079 seconds, or about 7.9ms
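If you'd rather not do the arithmetic by hand, the same calculation fits in a small Python function. This is only a sketch: `estimate_lag_ms` and its parameter names are invented for this example and are not part of the piLagTester software. It uses exact frame lengths (1000/fps), so the earlier 60fps example comes out at about 26.7ms instead of the 25.6ms you get when rounding a frame to 16ms.

```python
def estimate_lag_ms(frames_after_led: int, fraction_drawn: float,
                    camera_fps: float, display_hz: float) -> float:
    """Partial-frame lag estimate in milliseconds.

    frames_after_led: camera frames after the first LED-lit frame,
        up to and including the frame where the target bar appears.
    fraction_drawn: how far down the screen the bar reaches in that
        last frame (0.0 = just started, 1.0 = bottom of the screen).
    """
    camera_frame_ms = 1000.0 / camera_fps
    refresh_ms = 1000.0 / display_hz
    # The first LED-lit frame counts as half a frame (assumed halfway).
    counted_frames = 0.5 + frames_after_led
    # Subtract the time the display had already been drawing the bar.
    return counted_frames * camera_frame_ms - fraction_drawn * refresh_ms


if __name__ == "__main__":
    # 75hz display, 120fps camera, 30% of the target visible
    print(f"{estimate_lag_ms(2, 0.3, 120, 75):.1f} ms")   # 16.8 ms
    # 120hz display, 240fps camera, 80% of the target visible
    print(f"{estimate_lag_ms(3, 0.8, 240, 120):.1f} ms")  # 7.9 ms
```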

Using this method the error should be relatively low: on average no more than half the frame length of the camera. This is because we don't actually know when the LED turned on within the frame and have to guess halfway. A 60fps camera would give 8ms of error, and a 120fps camera would give just 4ms. But that's on average; the worst case (1 full frame off) is certainly possible. The solution is to take a longer video, record 5-8 presentations of the target bar, and then average the estimates. This seems to give a resolution of 1-2ms.

Why: long answer. Because of the magic of averages, the final estimate can actually be more precise than the average error, assuming the error is random. Imagine that we measured 16 times and, by luck, the spacing between the LED turning on and the camera frame capture was spread evenly in 1ms increments. It's easy to see that our guess that the LED turned on exactly halfway between captured frames would be too high 8 times and too low 8 times. But on average it would be exactly right. Of course we don't have to make 16 measurements, just enough that the average error is near zero. 5-8 seems to be about the right number.
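The 16-measurement thought experiment can be checked with a quick Python sketch. The function names are hypothetical, and two assumptions are baked in: the camera frame is rounded to 16ms, and the only source of error is the unknown LED timing, spread uniformly across one frame. The LED onsets are placed at the center of each 1ms slot so that exactly half fall on either side of the halfway guess.

```python
import math

FRAME_MS = 16.0  # camera frame length, rounded as in the text


def halfway_errors(n_measurements: int, frame_ms: float = FRAME_MS) -> list:
    """Error of the 'assume halfway' guess for LED onsets spread
    evenly across one camera frame (estimate minus truth, in ms)."""
    step = frame_ms / n_measurements
    # True time between the LED turning on and the frame that first
    # shows it lit, taken at the center of each evenly spaced slot.
    true_offsets = [(i + 0.5) * step for i in range(n_measurements)]
    return [frame_ms / 2 - t for t in true_offsets]


def standard_error_ms(n_measurements: int, frame_ms: float = FRAME_MS) -> float:
    """Typical error of the average of n estimates, assuming each
    single error is uniformly random over one frame length."""
    return frame_ms / math.sqrt(12 * n_measurements)


if __name__ == "__main__":
    errors = halfway_errors(16)
    print(sum(e > 0 for e in errors), sum(e < 0 for e in errors))  # 8 8
    print(sum(errors) / len(errors))                               # 0.0
    for n in (1, 5, 8):
        print(f"{n} measurement(s): about {standard_error_ms(n):.1f} ms")
```

Under those assumptions the typical error of the average is about 2.1ms for 5 measurements and 1.6ms for 8, which lines up with the 1-2ms figure above.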

Why: short answer. Just like any scientist, you need to take multiple measurements because no one measurement is going to be perfect. What, you didn't realize you were a scientist? That's right, owning a piLagTester is all it takes: skip grad school and jump straight to the fame and fortune that a PhD confers. And, since the piLagTester is a DIY project, grant yourself an honorary BS in engineering while you are at it (degree granting is one of the privileges of having a PhD).
