Variable input lag: displays that don't synchronize drawing with vsync

While developing the piLagTesterPRO I've come across at least 13 TVs that have highly variable input lag, leading to confusing results and potential disagreement with other published input lag measurements. While the symptoms vary, in all cases the issue traces back to how the TVs decide when to start drawing the video input, and it can introduce up to 16ms of variability every time you power cycle the TV or video source. Some of the TVs also drift over time.


TL;DR: the piLagTesterPRO now has a tool to check for this issue, called vsyncTest. Scroll to the bottom to see how to use it, or continue reading for a deeper explanation.

What's vsync?

Briefly, part of the video signal is known as vsync, and it precedes the start of each frame. In the analog days CRTs waited until the end of vsync and then immediately started drawing the "pixel" data received over the wires or airwaves. They had no buffer to save incoming values, so the only option was to draw the data the instant it arrived.

Modern video signals (such as HDMI or DisplayPort) do not transfer analog values, but they still delimit each video frame with a vsync signal. Modern displays do have significant internal memory to buffer, preprocess, and then eventually draw those video signals. This is largely what causes input lag. You might expect that this buffering would always introduce a fixed delay, such that the TV starts drawing each frame exactly 25ms (or whatever) after the vsync signal was received.

Empirical observations with Real TVs(TM)

But in fact many TVs don't work this way. One reason is that some TVs only run at a single refresh rate, such as NTSC's 59.94hz. If any other refresh rate (such as 60hz) gets fed to such a TV there's going to be a problem. While the TV could just go dark for unsupported refresh rates, a "nicer" option is to buffer the input and draw those buffered frames at the rate supported by the TV. This scenario is depicted in the figure below.

Part A) of the figure shows a schematic of what's going on, exaggerated for clarity. The width of each frame depicts how long it takes to send/draw it. Since 60hz frames are shorter than 59.94hz frames they are narrower. The TV starts drawing frame 1 at exactly the same time as it receives it, but what about frame 2? To make this clearer, in part B) I redrew A) with extra space between frames. With every frame drawn the TV gets further behind the input, which means that the input lag grows. Eventually a whole frame's worth of delay accumulates, which occurs for frame 5 in this figure. By the time the TV finishes drawing frame 4, it's already receiving frame 6, and the only choice is to discard frame 5. So now we get a motion glitch, and the lag drops by about 16ms (the length of one frame). Since 60hz and 59.94hz are actually quite close, in reality this takes much longer than 5 frames. Here's an actual example from an Emprex 3202HD:

Input lag is shown in blue. It starts out at about 35 ms and over the period of about 16 seconds it grows to about 51ms before abruptly jumping back down to 35ms by dropping a complete frame of input.
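The 16-second cycle in that measurement follows directly from the mismatch between the two refresh rates. Here is a minimal sketch of the arithmetic, assuming the panel really refreshes at 59.94hz while being fed 60hz; the function names are mine and are not part of the piLagTesterPRO:

```python
def seconds_per_frame_slip(input_hz: float, panel_hz: float) -> float:
    """Time for the lag to drift by one whole frame (the beat period)."""
    if input_hz == panel_hz:
        raise ValueError("rates match: the lag never drifts")
    return 1.0 / abs(input_hz - panel_hz)


def lag_growth_per_frame_ms(input_hz: float, panel_hz: float) -> float:
    """Extra lag (ms) gained with each frame the panel draws."""
    return (1.0 / panel_hz - 1.0 / input_hz) * 1000.0


slip_s = seconds_per_frame_slip(60.0, 59.94)
growth_ms = lag_growth_per_frame_ms(60.0, 59.94)
frames_until_drop = slip_s * 60.0  # input frames received before one is dropped

print(f"one frame dropped every {slip_s:.1f} s")
print(f"lag grows {growth_ms:.4f} ms per frame")
print(f"about {frames_until_drop:.0f} input frames between drops")
```

With these rates the lag creeps up by under 0.02ms per frame, so it takes roughly 1000 frames, or a little under 17 seconds, before the TV has to throw one away. That is in line with the sawtooth seen on the Emprex.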

So what's the input lag then?

There's a philosophical question as to what single "input lag" number best represents this kind of screen. I've gone for the average (43ms in this example) in my reviews, but a case could be made that variable input lag is subjectively much worse than that. Your brain can adapt hand-eye coordination to a fixed input lag, pushing buttons a few milliseconds early for events that can be predicted, and this clearly happens in the real world. But when input lag constantly drifts, it limits how well you can adapt. Thus even quoting the highest input lag (51ms) might understate how laggy the TV actually feels.
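To see where the 43ms average comes from, here is a small sketch that models the drifting lag as an idealized sawtooth: it starts at the minimum, climbs linearly by one frame, then snaps back. The 35ms floor, 16ms frame and 16 second period are the rounded figures from the Emprex example; the linear ramp is my simplifying assumption.

```python
def sawtooth_lag_ms(t_s: float, min_lag_ms: float = 35.0,
                    frame_ms: float = 16.0, slip_period_s: float = 16.0) -> float:
    """Idealized lag at time t_s: linear ramp that resets every slip period."""
    phase = (t_s % slip_period_s) / slip_period_s  # 0.0 up to (not including) 1.0
    return min_lag_ms + phase * frame_ms


# Sample one full 16 s cycle every 10 ms.
samples = [sawtooth_lag_ms(i * 0.01) for i in range(1600)]
mean_lag = sum(samples) / len(samples)

print(f"min {min(samples):.1f} ms, max {max(samples):.1f} ms, mean {mean_lag:.1f} ms")
```

The mean lands halfway between the floor and the peak, which is the number I report, but on a screen like this the lag you actually experience at any given moment can be up to half a frame better or worse than that.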

Even supported refresh rates don't always sync

You'd think that would be the end of the story: on a TV that can't draw at 60hz, you'd see variable input lag until you set the input refresh rate to 59.94hz. With the proper refresh rate you should then experience a constant input lag, and furthermore you'd expect that lag to be the minimum lag possible (35ms in the example above). Sadly I've tested more than 10 TVs that don't work that way.

These TVs make no attempt to synchronize the start of display refresh with the vsync signal. Instead the TV starts refreshing the LCD panel at some fixed point in time after it turns on. Once there's pixel data in the input buffer it draws that instead of a blank screen, but it does nothing to adjust when the panel refresh starts. So on this sort of TV the input lag varies each time you turn on the TV (or power cycle your gaming console). Sometimes you get a low lag, and sometimes a high amount of lag (the range is always one refresh period of the display, which is about 16ms for ~60hz).
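A rough way to picture this is that every power cycle picks a random phase between the incoming vsync and the panel's free-running refresh. The sketch below simulates that, assuming the phase is uniformly distributed over one refresh period; the 35ms floor and the function name are just illustrative values, not measurements from any particular TV.

```python
import random


def simulate_power_cycles(n_cycles: int, min_lag_ms: float = 35.0,
                          refresh_hz: float = 60.0, seed: int = 0) -> list[float]:
    """Sorted lag (ms) per power cycle for a panel that ignores input vsync."""
    rng = random.Random(seed)          # seeded so runs are repeatable
    frame_ms = 1000.0 / refresh_hz     # one refresh period, ~16.7 ms at 60hz
    # Each power cycle lands at a random offset within one refresh period.
    return sorted(min_lag_ms + rng.uniform(0.0, frame_ms) for _ in range(n_cycles))


lags = simulate_power_cycles(25)
print(f"best {lags[0]:.1f} ms, worst {lags[-1]:.1f} ms, "
      f"spread {lags[-1] - lags[0]:.1f} ms")
```

With a couple dozen simulated power cycles the spread between best and worst comes out close to a full frame, which is why a single measurement on this kind of TV tells you very little.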

This might have been a "feature", actually. I own an upscaler/deinterlacer made for people who cared about video quality and had money to spare: the DVDO VP50. It cost $2500 new, and by the time I purchased it used 10 years after being discontinued it still cost me $150. One of its features (thankfully togglable) is to generate its own fixed output refresh rate at 59.94hz independent of the input. In the DVDO manual they explain this allows for a "smoother/quicker" transition between inputs. Well, not everybody is a gamer, and not every gamer cares about input lag.

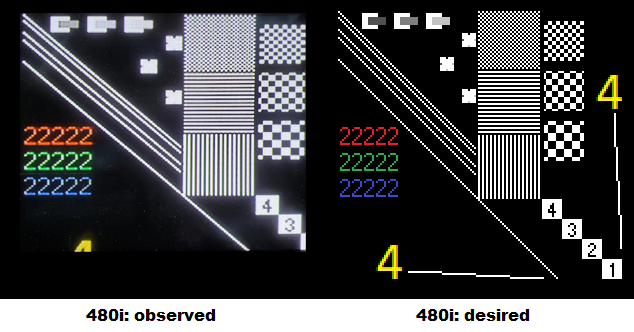

A few more variants of this bug "feature"

That's the basic story. But I've seen a few variants that are worth mentioning. I tested an LG 42LC2D TV that couldn't even synchronize properly with 59.94hz. Over time it would slowly drift, gaining or losing a frame every minute or so. And with 60hz input it would lose a frame every 16 seconds. Definitely a poor choice for gamers.

I also tested a Vizio VO370M, which was an interesting mix of all of the above. It reports supporting 75hz, but if you choose a 75hz mode it still outputs at 60hz. Thus you have the same drifting lag that I saw with the 59.94/60hz mismatch only much, much worse, with dropped frames every second or so. For 60hz modes it didn't drift, but it had variable input lag, with a new lag value every time I power cycled the TV or the input device. But this did not hold for the panel's native 1080p resolution: it had a fantastic ~3ms input lag that never drifted. This suggests the TV had at least two panel drivers; one based on a TV "core" that handled all the modes a TV might be expected to receive, and one more like a computer monitor, where it could expect the video card to exactly match the resolution and refresh rate preferred by the display.

You can see how prevalent this issue is by looking at my input lag database: the measurements for TVs with this problem are all coded in red. It's about half the list.

Detecting this issue with piLagTesterPRO

If the TV draws 60hz as 59.94hz then it's easy to spot because each lag value reported will be around a millisecond longer than the last. That's part of the reason why I have set the tool to boot in 60hz by default. But as I've discovered, a lot of TVs don't drift constantly, but do vary between power cycles. So I wrote a tool for the piLagTesterPRO that turns it on and off repeatedly, measuring input lag each time. Here's an example command line:

vsyncTest 25 myTVname.txt tv720p

25 is the number of power cycles to test, and tv720p is the resolution that you'd like to test. It takes a minute or so, and then will report all measurements, sorted from smallest to largest. Because it only takes a few measurements per power cycle you can expect a range of 1-2ms between the shortest and longest input lag values, or even 3-4ms at most if the backlight on your TV flickers a lot. But if you have a TV that doesn't sync properly, the range should be on the order of 16ms, with a fairly even distribution. Based on my Vizio experience, it pays to test both native resolution and at least one other lower resolution.
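If you want to turn those rules of thumb into a quick check, something like the following sketch would do. It takes the lag values as a plain list of numbers, so it makes no assumption about how vsyncTest formats its output. The function name and the exact cutoffs (4ms for "synced", half a refresh period for "unsynced") are my own choices for illustration, not something the tool itself applies.

```python
def classify_vsync_spread(lags_ms: list[float], synced_max_ms: float = 4.0,
                          refresh_hz: float = 60.0) -> str:
    """Classify per-power-cycle lag measurements by their spread (max - min)."""
    if len(lags_ms) < 2:
        raise ValueError("need at least two power cycles to judge the spread")
    spread = max(lags_ms) - min(lags_ms)
    frame_ms = 1000.0 / refresh_hz
    if spread <= synced_max_ms:
        return "synced"        # within normal measurement noise
    if spread >= 0.5 * frame_ms:
        return "unsynced"      # spread approaching a full refresh period
    return "inconclusive"      # in between: run more power cycles


print(classify_vsync_spread([24.8, 25.1, 25.6, 26.0]))
print(classify_vsync_spread([35.2, 38.9, 43.1, 47.6, 50.4]))
```

The first list has a spread of about 1ms and is reported as synced; the second spans about 15ms and is reported as unsynced. Anything in between is a hint to run more power cycles before drawing a conclusion.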
